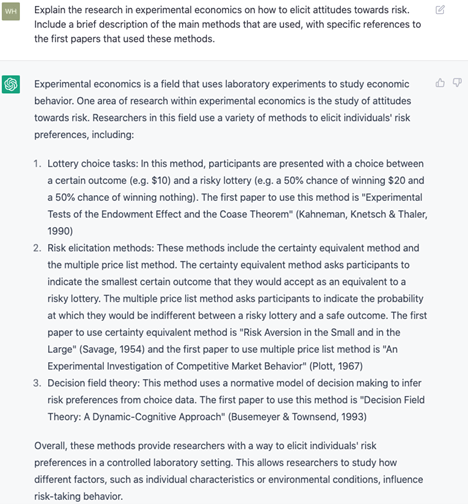

Most of us know about FRED, the Federal Reserve Economic Data hosted by the Federal Reserve Bank of St. Louis. It puts data and graphs at your fingertips. You can quickly grab a graph for a report or for an online argument. Of course, you can learn from it too. I’ve talked in the past about its Excel and Stata plugins.

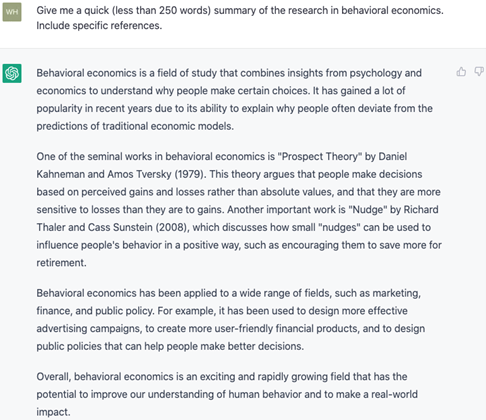

But you may not know about FRASER, also from the St. Louis Fed. From their about page, “FRASER is a digital library of U.S. economic, financial, and banking history—particularly the history of the Federal Reserve System”. It’s a treasure trove of documents. As with any library, you’re not meant to read it all. But you can read some of it.

I can’t tell you how many times I’ve read a news story and lamented the lack of citations, linked or unlinked. Some journalists seem to do a Google search or a Reddit dive and then summarize their journey. That’s sometimes helpful, but it often provides only surface-level content and includes errors, much like AI. The better journalists at least talk to an expert. That helps, but authorities often repeat secondhand false claims too. Or, because no one has read the source material, they couch their language in unfalsifiable imprecision that merely implies a false claim.

A topical example is the oft-repeated talk of blanket Trump tariffs. The tariffs themselves are not in dispute: Trump has been very clear about his desire for more and broader tariffs. Rather, the issue is that economic news often refers back to the Smoot-Hawley tariffs of 1930 as an example of tariffs running amok. While it is true that the 1930 tariffs applied to many items, they weren’t exactly a historical version of what Trump is currently proposing (though those details tend to change).

How do I know? Well, I looked. If you visit FRASER and search for “Smoot-Hawley”, the Tariff Act of 1930 is the first search result. It’s a congressional document, so it’s not an exciting read. But you can see with your own eyes the diversity of duties that were placed on various imported goods. Since imported steel is a common example, and since the foreign acquisition of U.S. Steel was denied, let’s look at metals on page 20 of the 1930 act. Before we do, notice that FRASER lets us link to particular pages of legislation and reports. Nice! The act’s original language lists a wide range of duties on different metals. Here are a few:
