I’m getting ready to give some public local talks about AI. Last week I shared some pictures that I think might help people understand ChatGPT, specifically:

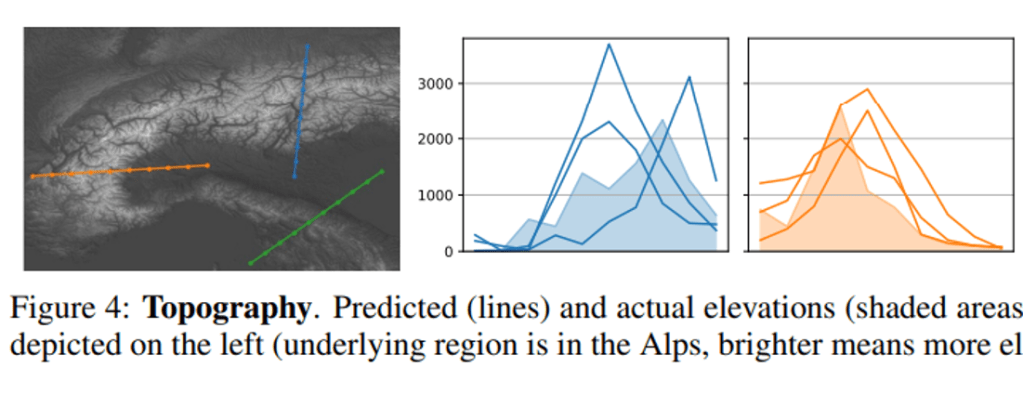

My first thought was that GPT-4 was giving incorrect estimates of the heights of these mountains because it does not actually “know” the correct elevations. But then a nagging question came to mind.

GPT has a “creativity parameter.” To avoid sounding stiff and boring, it sometimes intentionally does not select the top-rated next word in a sentence. Could GPT-4 know the exact elevations of these mountains and just be intentionally “creative” in this case?
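
To make the “creativity parameter” concrete, here is a minimal Python sketch of how such a setting can work. It assumes the model assigns a raw score to each candidate next word; the function name `sample_with_temperature`, the three words, and their scores are all made up for illustration and are not taken from OpenAI.

```python
import math
import random

def sample_with_temperature(scores, temperature, rng):
    """Pick one word from `scores` (word -> raw score) at a given temperature."""
    if temperature <= 0:
        # Temperature 0: always take the top-rated word, with no randomness.
        return max(scores, key=scores.get)
    # Divide each score by the temperature, then turn scores into weights
    # (a softmax; subtracting the maximum keeps exp() from overflowing).
    scaled = {word: score / temperature for word, score in scores.items()}
    top = max(scaled.values())
    words = list(scaled)
    weights = [math.exp(scaled[word] - top) for word in words]
    return rng.choices(words, weights=weights, k=1)[0]

# Made-up scores for three candidate next words (illustration only).
scores = {"tall": 3.0, "high": 2.0, "steep": 1.0}
rng = random.Random(0)

print(sample_with_temperature(scores, 0, rng))                      # no randomness
print([sample_with_temperature(scores, 2.0, rng) for _ in range(8)])  # more variety
```

At a temperature of 0 the top-rated word always wins. As the temperature rises, lower-rated words are picked more often, which is the sense in which the output becomes more “creative.”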

I do not want to stand up in front of the local Rotary Club and say something wrong. So, I went to a true expert, Lenny Bogdonoff, to ask for help. Here is his reply:

Not quite. It’s not that it knows or doesn’t know, but based on the prompt, it’s likely unable to parse the specific details and is outputting results accordingly. There is a component of stochastic behavior based on what part of the model weights are activated.

One common practice to help avoid this, and to see what the model does grasp, is to ask it to think step by step and explain its reasoning. When doing this, you can see the fault in logic.

All that being said, the vision model is actually faulty in being able to grasp the relative position of information, so this kind of task will be more likely to hallucinate.

There are better vision models that aren’t OpenAI-based. For example, Qwen-VL-Max, from the Chinese company Alibaba, is very good. Another is LLaVA, which uses different baselines of open source language models to add vision capabilities.

Depending on what you need vision for, models can be spiky in capability: good at OCR but bad at relative positioning, good at classifying a specific UI element but bad at detecting plants, and so on.

Joy: So, I think I can tell the Rotary Club that GPT was “wrong” as opposed to “intentionally creative.” I think, as I originally concluded, you should not make ChatGPT the pilot of your airplane and go to sleep when approaching the Alps. ChatGPT should be used for what it is good at, such as writing the rough draft of a cover letter. (We have great “autopilot” software for flying planes, already, without involving large language models.)

Another expert, Gavin Leech, also weighed in with some helpful background information:

- the creativity parameter is known as temperature. But you can actually radically change the output (intelligence, style, creativity) by using more complicated sampling schemes. The best analogy for changing the sampling scheme is that you’re giving it a psychiatric drug. Changing the prompt, conversely, is like CBT or one of those cute mindset interventions.

- For each real-name model (e.g. “gpt-4-0613”), there are three versions: the base model (which now no one except highly vetted researchers has access to), the instruction-tuned model, and the RLHF (or rather RLAIF) model. The base model is wildly creative, unhinged, but the RLHF one (which the linked researchers use) is heavily electroshocked into not intentionally making things up (as Lenny says).

- It’s currently not usually possible to diagnose an error – the proverbial black box. My friends are working on this, though.

- For more, note OpenAI admitting the “laziness” of their own models. The Turbo model line is intended to fix this.
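
Gavin did not name a specific sampling scheme, so as one example of what goes beyond a single temperature setting, here is a rough Python sketch of top-p (also called nucleus) sampling. The idea is to keep only the most likely words, enough of them that their combined probability reaches a cutoff `p`, and choose among those. The function name `top_p_sample`, the words, and the probabilities are invented for illustration.

```python
import random

def top_p_sample(probs, p, rng):
    """Top-p (nucleus) sampling over `probs` (word -> probability)."""
    # Rank words from most to least likely.
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    kept, total = [], 0.0
    for word, prob in ranked:
        kept.append((word, prob))
        total += prob
        if total >= p:
            break  # Enough probability mass collected; drop the rest.
    words = [word for word, _ in kept]
    weights = [prob for _, prob in kept]
    # Sample only among the kept words, in proportion to their probability.
    return rng.choices(words, weights=weights, k=1)[0]

# Made-up probabilities for four candidate next words (illustration only).
probs = {"tall": 0.6, "high": 0.3, "steep": 0.08, "purple": 0.02}
rng = random.Random(0)
print([top_p_sample(probs, 0.85, rng) for _ in range(8)])
```

With a cutoff of 0.85, only “tall” and “high” survive in this toy example, so the unlikely words can never be chosen, no matter how the dice fall.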

Thank you, Lenny and Gavin, for donating your insights.

https://marginalrevolution.com/marginalrevolution/2024/04/gpt-4-turbo-still-doesnt-answer-this-question-well.html “Telling it to ‘reason step by step’ is no panacea either. And if you want to make it harder, ask for more than three people…”
