ChatGPT and related AI have been all the rage these past few months. Among other things, “AI” has become the shiny object that companies dangle before investors, sending the shares of the “Magnificent Seven” large tech stocks rocketing upward.

However, a recent poll by computer security firm Malwarebytes notes a marked turn in the public’s attitude towards these products:

It seems the lustre of the chatbot-that’s-going-to-change-everything is starting to fade….

When people explored its capabilities in the days and weeks after its launch, it seemed almost miraculous—a wonder tool that could do everything from creating computer programs and replacing search engines, to writing students’ essays and penning punk rock songs. Its release kick-started a race to disrupt everything with AI, and integrate ChatGPT-like interfaces into every conceivable tech product.

But those that know the hype cycle know that the Peak of Inflated Expectations is quickly followed by the Trough of Disillusionment. Predictably, ChatGPT’s rapid ascent was met by an equally rapid backlash as its shortcomings became apparent….

A new survey by Malwarebytes exposes deep reservations about ChatGPT, with optimism in startlingly short supply. Of the respondents familiar with ChatGPT:

- 81% were concerned about possible security and safety risks.

- 63% don’t trust the information it produces.

- 51% would like to see work on it paused so regulations can catch up.

The concerns expressed in the survey mirror the trajectory of the news about ChatGPT since its introduction in November 2022.

As EWED’s own Joy Buchanan has been pointing out, specifically with regard to citations in research papers (here, here, and in the Wall Street Journal), ChatGPT tends to “hallucinate”, i.e., to report things that are simply not true. In the recent working paper “GPT-3.5 Hallucinates Nonexistent Citations: Evidence from Economics”, she warns of a possible vicious spiral of burgeoning falsehoods, in which AI-generated errors introduced into internet content such as research papers are then picked up as “learning” input for the next generation of AI training.

Real-world consequences of ChatGPT’s hallucinations are starting to crop up. A lawyer has found himself in deep trouble after filing an error-ridden submission in an active court case. Evidently, his assistant, also an attorney, relied on ChatGPT, which came up with a raft of “citations to non-existent cases.” Oops.

And now we have what is believed to be “the first defamation lawsuit against artificial intelligence.” Talk show host Mark Walters filed a complaint in Georgia which is:

…asking for a jury trial to assess damages after ChatGPT produced a false complaint to a journalist about the radio host. The faux lawsuit claimed that Mr. Walters, the CEO of CCW Broadcast Media, worked for a gun rights group as treasurer and embezzled funds.

…Legal scholars have split on whether the bots should be sued for defamation or under product liability, given it’s a machine — not a person — spreading the false, hurtful information about people.

The issue arose when an Australian mayor threatened to sue the AI company this year over providing false news reports that he was guilty of a foreign bribery scandal.

Wow.

Thousands of AI experts and others have signed an open letter asking: “Should we let machines flood our information channels with propaganda and untruth? Should we automate away all the jobs, including the fulfilling ones? Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us? Should we risk loss of control of our civilization?” The letter states that “Such decisions must not be delegated to unelected tech leaders. Powerful AI systems should be developed only once we are confident that their effects will be positive and their risks will be manageable.” It therefore urges “all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4…If such a pause cannot be enacted quickly, governments should step in and institute a moratorium…AI labs and independent experts should use this pause to jointly develop and implement a set of shared safety protocols for advanced AI design and development that are rigorously audited and overseen by independent outside experts.”

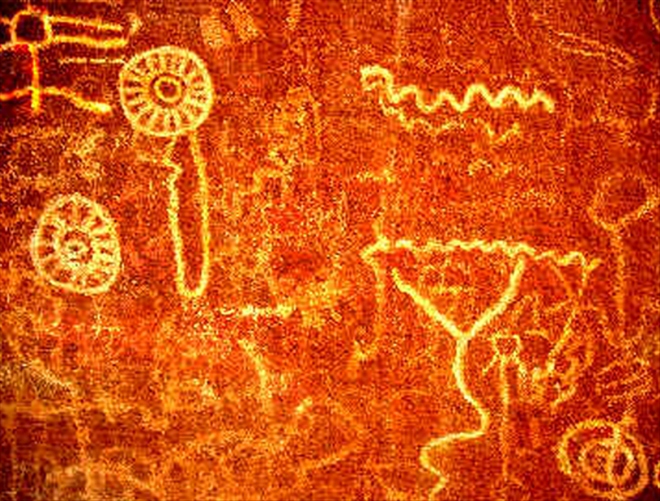

Oh, the irony. There is reason to believe that our Stone Age ancestors were creating unreal images like this:

whilst stoned on peyote, shrooms, or oxygen deprivation. And here we are in 2023, with the cutting edge in information technology, running on the fastest specially-fabricated computing devices, and we get…hallucinations.