This is a transcript of Lex Fridman Podcast #419 with Sam Altman 2. Sam Altman is (once again) the CEO of OpenAI and a leading figure in artificial intelligence. Two parts of the conversation stood out to me, and I don’t mean the gossip or the AGI predictions. The links in the transcript will take you to a YouTube video of the interview.

Lex Fridman(00:53:22) You mentioned this collaboration. I’m not sure where the magic is, if it’s in here or if it’s in there or if it’s somewhere in between. I’m not sure. But one of the things that concerns me for knowledge task when I start with GPT is I’ll usually have to do fact checking after, like check that it didn’t come up with fake stuff. How do you figure that out that GPT can come up with fake stuff that sounds really convincing? So how do you ground it in truth?

Sam Altman(00:53:55) That’s obviously an area of intense interest for us. I think it’s going to get a lot better with upcoming versions, but we’ll have to continue to work on it and we’re not going to have it all solved this year.

Lex Fridman(00:54:07) Well the scary thing is, as it gets better, you’ll start not doing the fact checking more and more, right?

Sam Altman(00:54:15) I’m of two minds about that. I think people are much more sophisticated users of technology than we often give them credit for.

Lex Fridman(00:54:15) Sure.

Sam Altman(00:54:21) And people seem to really understand that GPT, any of these models hallucinate some of the time. And if it’s mission-critical, you got to check it.

Lex Fridman(00:54:27) Except journalists don’t seem to understand that. I’ve seen journalists half-assedly just using GPT-4. It’s-

Sam Altman(00:54:34) Of the long list of things I’d like to dunk on journalists for, this is not my top criticism of them.

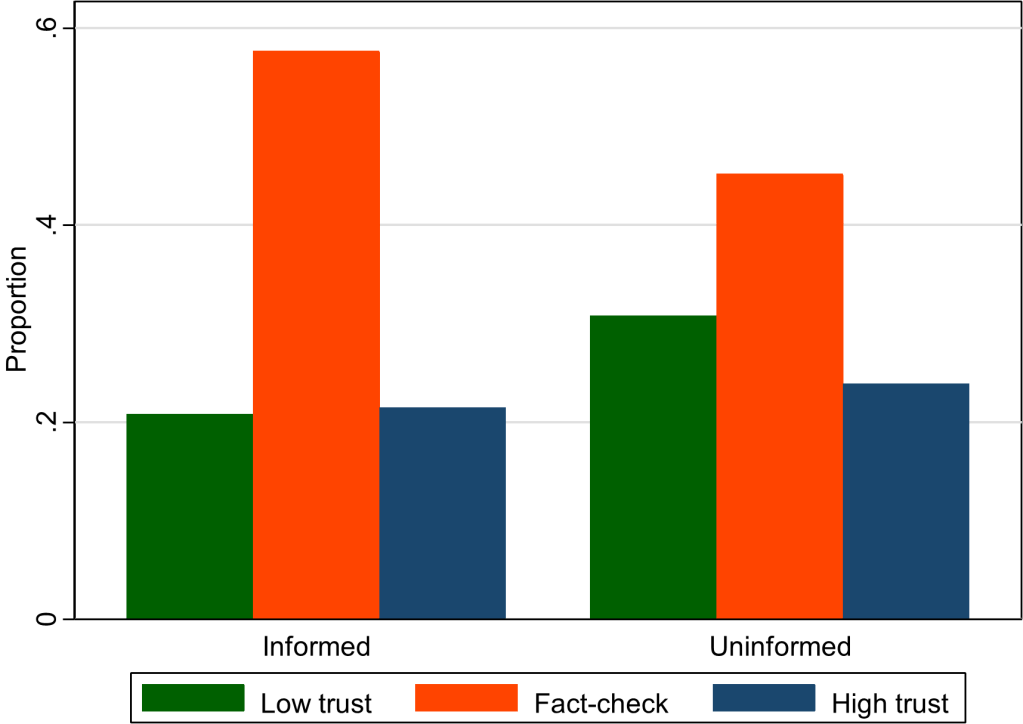

As EWED readers know, I have a paper about ChatGPT hallucinations and a paper about ChatGPT fact-checking. Lex is concerned that fact-checking will stop if the quality of ChatGPT goes up, even though no one really expects the hallucination rate to go to zero. Sam takes the optimistic view that humans will use the tool well. I suppose that Altman generally holds the view that his creation is going to be used for good, on net. Or maybe he is just being a salesman who does not want to publicly dwell on the negative aspects of ChatGPT.

I have also written about the tech pipeline and what makes people shy away from computer programming.

Lex Fridman(01:29:53) That’s a weird feeling. Even with a programming, when you’re programming and you say something, or just the completion that GPT might do, it’s just such a good feeling when it got you, what you’re thinking about. And I look forward to getting you even better. On the programming front, looking out into the future, how much programming do you think humans will be doing 5, 10 years from now?

Sam Altman(01:30:19) I mean, a lot, but I think it’ll be in a very different shape. Maybe some people will program entirely in natural language.

Someday, the skills of a computer programmer might morph to be closer to the skills of a manager of humans, since LLMs were trained on human writing.

In my 2023 talk, I suggested that programming will get more fun because LLMs will do the tedious parts. I also suggested that parents should teach their kids to read instead of “code.”

The tedious coding tasks previously done by humans did “create jobs.” I am not worried about mass unemployment yet. We have so many problems to solve (see my growing to-do list for intelligence). There are big transitions coming up. Sama says GPT-5 will be a major step up. He claimed that one reason OpenAI keeps releasing intermediate models is to give humanity a heads-up on what is coming down the line.