Joy writes: I read Co-Intelligence by Ethan Mollick (thanks to Samford for the free book). Most of it is old news for those of us who follow Ethan on social media and use ChatGPT. However, one thing that stood out to me was his mention of a study in which people chose to give themselves a painful shock rather than sit alone in silence for 15 minutes.

Claude comments further based on my prompt:

The End of Boredom: How AI Companions Might Reduce Random Violence

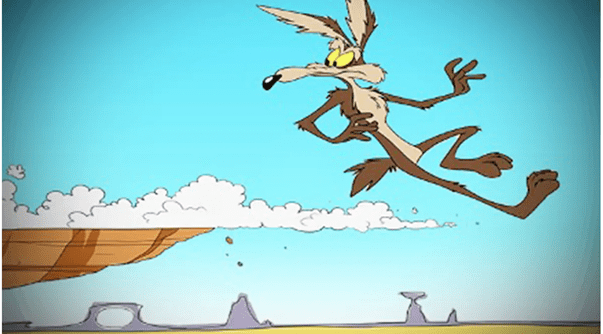

Remember that study where people would rather shock themselves than sit alone with their thoughts? Ethan Mollick references it in Co-Intelligence, and it reveals something unsettling: 67% of men and 25% of women gave themselves at least one electric shock during just 15 minutes of sitting quietly alone.

Here’s a strange thought—what if our AI-saturated future accidentally reduces certain types of violence simply by eliminating boredom?

The Violence-Boredom Connection

The proverb “idle hands are the devil’s workshop” exists for a reason. Research has long linked boredom to risk-taking, substance abuse, and impulsive violence: the opportunistic kind that emerges from restlessness rather than planning. Young men starting fights on street corners, vandalism on summer nights, restless energy that sometimes turns destructive: some of this may stem from the unbearable weight of having nothing to do.

Enter Infinite Engagement

We’re rapidly approaching a world where boredom might become extinct. AI companions are becoming always available, infinitely patient, endlessly novel, and closely tailored to individual interests. Your future AI won’t just answer questions; it will debate philosophy at 3 AM, create personalized games, generate stories with you as the protagonist, or help you explore any rabbit hole your curiosity leads you down.

The cognitive void that once led people to shock themselves rather than think? It might simply cease to exist.

The Unexpected Benefits

Consider the implications: Young men who might have started fights out of restlessness could instead be deeply engaged in AI-assisted music production or coding projects. The same restless energy that manifests destructively could be channeled into creative collaboration.

AI companions could help people process frustration before it builds to a breaking point. Imagine having an infinitely patient listener during those dangerous 2 AM spirals of rage. AI interaction would not replace human connection, but it might buffer the worst effects of isolation, which can contribute to radicalization.

The Dark Side

This isn’t utopian. An always-engaged society raises concerns: What happens to human relationships when AI companionship is always easier? Does constant stimulation atrophy our ability to self-reflect? Might we lose the creative insights that emerge from boredom?

Crucially, this would only address impulsive, boredom-driven violence. Systemic violence, ideological extremism, and deeper social problems won’t disappear because people have engaging AI companions.

A Strange New World

For the first time in history, boredom—that uncomfortable void that has driven both creativity and destruction—might become optional. The same species that chose shocks over silence is about to get exactly what it wanted: constant, meaningful stimulation.

Whether this leads to a more peaceful society or new problems we haven’t imagined remains to be seen. The question is whether we’ll recognize what we’ve lost only after it’s gone.

The experiment is already underway—we’re all participating in it.

Joy comments at the end: The AI-written essay is willing to explore downsides of AI engagement.