Everyone who has not been living under a rock this year has heard the buzz around ChatGPT and generative AI. However, not everyone may have clear definitions in mind, or an understanding of how this stuff works.

Artificial intelligence (AI) has been around in one form or another for decades. Computers have long been used to analyze information and come up with actionable answers. Classically, computer output has been in the form of numbers or graphical representations of numbers. Or perhaps in the form of chess moves: computers have been beating all human opponents since about 2000.

Generative AI is able to “generate” a variety of novel content, such as images, video, music, speech, text, software code and product designs, with a quality that is difficult to distinguish from human-produced content. This mimicry of human content creation is enabled by having the AI programs analyze reams and reams of existing content (“training data”), using enormous computing power.

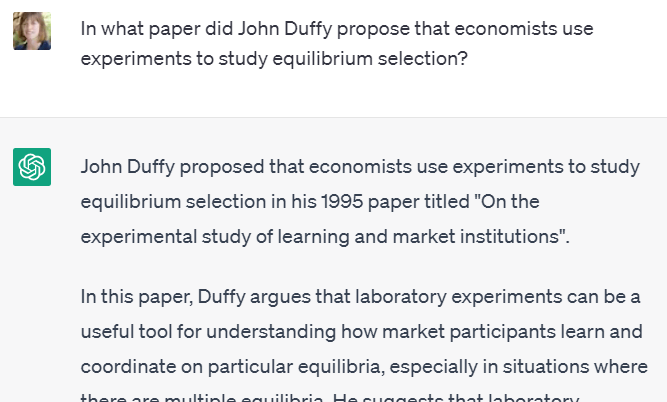

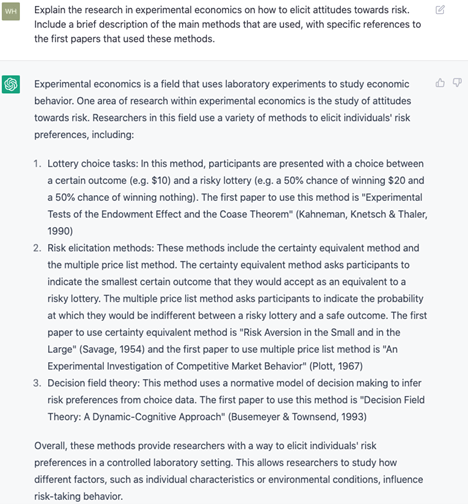

I wanted to excerpt here a fine article I just saw which is informative on this subject. Among other things, it lists some examples of gen-AI products, and describes the “transformer” model that underpins many of these products. I skipped the section of the article that discusses the potential dangers of gen-AI (e.g., problems with false “hallucinations”), since that topic has been treated already in this blog.

Between this article and the Wikipedia article on Generative artificial intelligence, you should be able to hold your own, or at least ask intelligent questions, when the subject next comes up in your professional life (which it likely will, sooner or later).

One technical point for data nerds is the distinction between “generative” and “discriminative” approaches in modeling. This is not treated in the article below, but see here.
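For a rough feel of that distinction, here is a minimal Python sketch of my own (it is not from the article below). Everything in it is an assumption made for illustration: the toy numbers, the two labels "a" and "b", the function names, and the choice of a simple Gaussian fit for the generative side and a midpoint threshold as a stand-in for a learned decision boundary on the discriminative side. The point is only that a generative model describes how the data itself is distributed, so it can both classify and produce new samples, while a discriminative model learns only where the boundary between classes lies.

```python
import math
import random
import statistics

# Toy 1-D measurements for two made-up classes (illustrative values only).
data = {
    "a": [150.0, 152.0, 155.0, 158.0, 160.0],
    "b": [170.0, 172.0, 175.0, 178.0, 180.0],
}


def fit_generative(data):
    """Generative: estimate p(y) and a Gaussian p(x | y) for each class."""
    total = sum(len(xs) for xs in data.values())
    return {
        label: {
            "prior": len(xs) / total,
            "mean": statistics.mean(xs),
            "std": statistics.stdev(xs),
        }
        for label, xs in data.items()
    }


def gaussian_pdf(x, mean, std):
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2 * math.pi))


def classify_generative(model, x):
    """Pick the label that maximizes p(x | y) * p(y) (Bayes' rule)."""
    return max(
        model,
        key=lambda y: model[y]["prior"]
        * gaussian_pdf(x, model[y]["mean"], model[y]["std"]),
    )


def sample_generative(model, label, rng):
    """Because the model describes the data, it can generate a new point."""
    return rng.gauss(model[label]["mean"], model[label]["std"])


def fit_discriminative(data):
    """Discriminative stand-in: keep only a boundary between the classes.

    Here the boundary is the midpoint between the largest "a" value and
    the smallest "b" value; a real model would learn it from the data.
    """
    return (max(data["a"]) + min(data["b"])) / 2


def classify_discriminative(threshold, x):
    return "a" if x < threshold else "b"


model = fit_generative(data)
threshold = fit_discriminative(data)
rng = random.Random(0)

print("generative says:", classify_generative(model, 157.0))
print("discriminative says:", classify_discriminative(threshold, 157.0))
print("new sample for class b:", sample_generative(model, "b", rng))
```

Both approaches agree on how to label a new point here, but only the generative one has anything to draw a fresh sample from. That is the sense in which large generative AI models, at vastly greater scale and with very different machinery, can produce new content rather than just sort existing content into categories.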

All text below the line of asterisks is from Generative AI Defined: How it Works, Benefits and Dangers, by Owen Hughes, Aug 7, 2023.

*******************************************************

What is generative AI in simple terms?

Generative AI is a type of artificial intelligence technology that broadly describes machine learning systems capable of generating text, images, code or other types of content, often in response to a prompt entered by a user.

Generative AI models are increasingly being incorporated into online tools and chatbots that allow users to type questions or instructions into an input field, upon which the AI model will generate a human-like response.

How does generative AI work?

Generative AI models use a complex computing process known as deep learning to analyze common patterns and arrangements in large sets of data and then use this information to create new, convincing outputs. The models do this by incorporating machine learning techniques known as neural networks, which are loosely inspired by the way the human brain processes and interprets information and then learns from it over time.

To give an example, by feeding a generative AI model vast amounts of fiction writing, over time the model would be capable of identifying and reproducing the elements of a story, such as plot structure, characters, themes, narrative devices and so on.

……

Examples of generative AI

…There are a variety of generative AI tools out there, though text and image generation models are arguably the most well-known. Generative AI models typically rely on a user feeding it a prompt that guides it towards producing a desired output, be it text, an image, a video or a piece of music, though this isn’t always the case.

Examples of generative AI models include:

- ChatGPT: An AI language model developed by OpenAI that can answer questions and generate human-like responses from text prompts.

- DALL-E 2: Another AI model by OpenAI that can create images and artwork from text prompts.

- Google Bard: Google’s generative AI chatbot and rival to ChatGPT. It’s trained on the PaLM large language model and can answer questions and generate text from prompts.

- Midjourney: Developed by San Francisco-based research lab Midjourney Inc., this gen AI model interprets text prompts to produce images and artwork, similar to DALL-E 2.

- GitHub Copilot: An AI-powered coding tool that suggests code completions within the Visual Studio, Neovim and JetBrains development environments.

- Llama 2: Meta’s open-source large language model can be used to create conversational AI models for chatbots and virtual assistants, similar to GPT-4.

- xAI: After funding OpenAI, Elon Musk left the project in July 2023 and announced this new generative AI venture. Little is currently known about it.

Types of generative AI models

There are various types of generative AI models, each designed for specific challenges and tasks. These can broadly be categorized into the following types.

Transformer-based models

Transformer-based models are trained on large sets of data to understand the relationships between sequential information, such as words and sentences. Underpinned by deep learning, these AI models tend to be adept at NLP [natural language processing] and understanding the structure and context of language, making them well suited for text-generation tasks. ChatGPT-3 and Google Bard are examples of transformer-based generative AI models.

Generative adversarial networks

GANs are made up of two neural networks known as a generator and a discriminator, which essentially work against each other to create authentic-looking data. As the name implies, the generator’s role is to generate convincing output such as an image based on a prompt, while the discriminator works to evaluate the authenticity of said image. Over time, each component gets better at their respective roles, resulting in more convincing outputs. Both DALL-E and Midjourney are examples of GAN-based generative AI models…

Multimodal models

Multimodal models can understand and process multiple types of data simultaneously, such as text, images and audio, allowing them to create more sophisticated outputs. An example might be an AI model capable of generating an image based on a text prompt, as well as a text description of an image prompt. DALL-E 2 and OpenAI’s GPT-4 are examples of multimodal models.

What is ChatGPT?

ChatGPT is an AI chatbot developed by OpenAI. It’s a large language model that uses transformer architecture — specifically, the “generative pretrained transformer”, hence GPT — to understand and generate human-like text.

What is Google Bard?

Google Bard is another example of an LLM based on transformer architecture. Similar to ChatGPT, Bard is a generative AI chatbot that generates responses to user prompts.

Google launched Bard in the U.S. in March 2023 in response to OpenAI’s ChatGPT and Microsoft’s Copilot AI tool. In July 2023, Google Bard was launched in Europe and Brazil.

…….

Benefits of generative AI

For businesses, efficiency is arguably the most compelling benefit of generative AI because it can enable enterprises to automate specific tasks and focus their time, energy and resources on more important strategic objectives. This can result in lower labor costs, greater operational efficiency and new insights into how well certain business processes are — or are not — performing.

For professionals and content creators, generative AI tools can help with idea creation, content planning and scheduling, search engine optimization, marketing, audience engagement, research and editing and potentially more. Again, the key proposed advantage is efficiency because generative AI tools can help users reduce the time they spend on certain tasks so they can invest their energy elsewhere. That said, manual oversight and scrutiny of generative AI models remains highly important.

Use cases of generative AI

Generative AI has found a foothold in a number of industry sectors and is rapidly expanding throughout commercial and consumer markets. McKinsey estimates that, by 2030, activities that currently account for around 30% of U.S. work hours could be automated, prompted by the acceleration of generative AI.

In customer support, AI-driven chatbots and virtual assistants help businesses reduce response times and quickly deal with common customer queries, reducing the burden on staff. In software development, generative AI tools help developers code more cleanly and efficiently by reviewing code, highlighting bugs and suggesting potential fixes before they become bigger issues. Meanwhile, writers can use generative AI tools to plan, draft and review essays, articles and other written work — though often with mixed results.

The use of generative AI varies from industry to industry and is more established in some than in others. Current and proposed use cases include the following:

- Healthcare: Generative AI is being explored as a tool for accelerating drug discovery, while tools such as AWS HealthScribe allow clinicians to transcribe patient consultations and upload important information into their electronic health record.

- Digital marketing: Advertisers, salespeople and commerce teams can use generative AI to craft personalized campaigns and adapt content to consumers’ preferences, especially when combined with customer relationship management data.

- Education: Some educational tools are beginning to incorporate generative AI to develop customized learning materials that cater to students’ individual learning styles.

- Finance: Generative AI is one of the many tools within complex financial systems to analyze market patterns and anticipate stock market trends, and it’s used alongside other forecasting methods to assist financial analysts.

- Environment: In environmental science, researchers use generative AI models to predict weather patterns and simulate the effects of climate change.

….

Generative AI vs. machine learning

As described earlier, generative AI is a subfield of artificial intelligence. Generative AI models use machine learning techniques to process and generate data. Broadly, AI refers to the concept of computers capable of performing tasks that would otherwise require human intelligence, such as decision making and NLP.

Machine learning is the foundational component of AI and refers to the application of computer algorithms to data for the purposes of teaching a computer to perform a specific task. Machine learning is the process that enables AI systems to make informed decisions or predictions based on the patterns they have learned.

(Again, to make sure credit goes where it is due, the text below the line of asterisks above was excerpted from Generative AI Defined: How it Works, Benefits and Dangers, by Owen Hughes.)