The historical US Census roles of disability among free persons are a mess. Specifically for the 1850-1870 censuses, the census bureau was not professionalized and the pay was low (a permanent office wasn’t founded until 1902). So, the enumerators were temporary employees and weren’t experts of their art. To boot, their handwriting wasn’t always crystal clear. Second, training for disability enumeration was even less complete and enumerators did their best with whom they encountered and how they understood the instructions. Finally, the digitized data in IPUMS doesn’t perfectly match the census reports. What a mess.

Guilty by Association

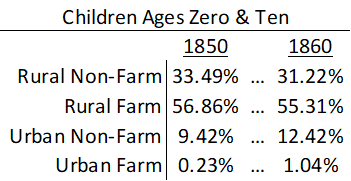

Disabled people and their families often misreported their status out of embarrassment or shame. Given that enumerators had quotas to fill, they were generally not inclined to investigate claimed statuses strenuously. Furthermore, disabled people were humans and not angels. Sometimes they themselves didn’t want to be associated with other types of disabled people. In particular, the disability designation in question (13) on the 1850 census questionnaire asked “Whether deaf and dumb, blind, insane, idiotic, pauper or convict”. Saying “yes” may put you in company that you don’t prefer to keep.

Summer censuses also sometimes missed deaf students who were traveling to or from a residential school.

Enumerator Discretion

The enumerator’s job was to write the disability that applied. What counts as deaf and dumb? That’s largely at the enumerator’s discretion. Some enumerators wrote ‘deaf’ even though that wasn’t an option. Was that shorthand for ‘Deaf and Dumb’? Or were they specifying that the person was deaf only and not dumb? We don’t know. But we do know that they didn’t follow the instructions. What if a person was both insane and blind? Then what should be written? “Blind/Insane” or “Blind and Insane” or “In-B” and any number of combinations were written. Some of them are easier to read than others.

Data Reading Errors

IPUMS is the major resource for using census data. The historical data was entered by foreign data-entry workers who didn’t always speak English. So, the records aren’t perfect. Some of the records are corroborated with Optical Character Recognition (OCR), but the historical script is sometimes hard to read. Finally, the fine folks at familysearch.org and Brigham Young University have used Church of Latter Day Saints (LDS) volunteers to proof data entries. Regardless, we know that the IPUMS data isn’t perfect and that the disability data is far from perfect. Usually, reports don’t dwell on it. They simply say that the data is incomplete.

The disability data is incomplete for a lot of reasons related to the respondent, the enumerator, the instructions, and the digital data creation. What a mess.